A subset of machine learning is closely related to computational statistics, which focuses on making predictions using computers; but not all machine learning is statistical learning. The study of mathematical optimization delivers methods, theory and application domains to the field of machine learning. Data mining is a related field of study, focusing on exploratory data analysis through unsupervised learning.

Some implementations of machine learning use data and neural networks in a way that mimics the working of a biological brain. In its application across business problems, machine learning is also referred to as predictive analytics. This book covers the field of machine learning, which is the study of algorithms that allow computer programs to automatically improve through experience.

Several learning algorithms, mostly unsupervised learning algorithms, aim at discovering better representations of the inputs provided during training. Classical examples include principal components analysis and cluster analysis. It has been argued that an intelligent machine is one that learns a representation that disentangles the underlying factors of variation that explain the observed data. Since the 2010s, advances in both machine learning algorithms and computer hardware have led to more efficient methods for training deep neural networks that contain many layers of non-linear hidden units. By 2019, graphics processing units (GPUs), often with AI-specific enhancements, had displaced CPUs as the dominant method of training large-scale commercial cloud AI.

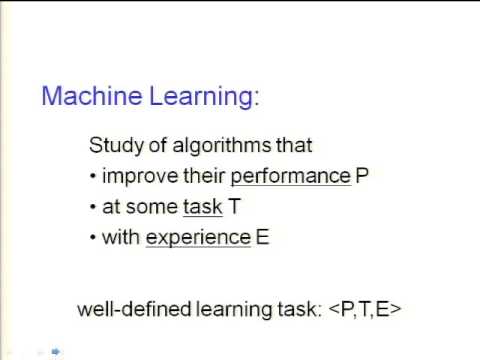

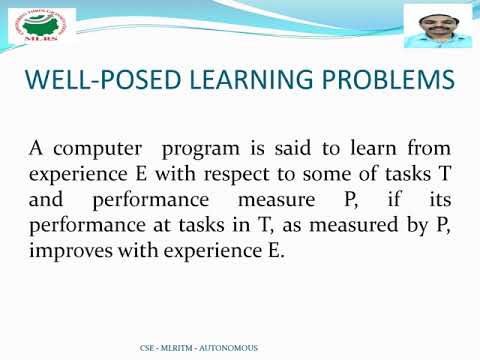

OpenAI estimated the hardware compute used in the largest deep learning projects from AlexNet to AlphaZero, and found a 300,000-fold increase in the amount of compute required, with a doubling-time trendline of 3.4 months. Machine learning is the study of computer algorithms that can improve automatically through experience and by the use of data. Machine learning algorithms build a model based on sample data, known as training data, in order to make predictions or decisions without being explicitly programmed to do so. Rule-based machine learning algorithms, in particular, seek to identify a set of context-dependent rules that collectively store and apply knowledge in a piecewise manner in order to make predictions.
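As a quick arithmetic check on the figures quoted above (this is not part of OpenAI's analysis), a 300,000-fold increase at one doubling every 3.4 months implies about log2(300,000) doublings. The sketch below reproduces that back-of-the-envelope calculation using only the two numbers from the text:

```python
import math

# Figures quoted in the text: a 300,000-fold increase in compute,
# with a doubling time of 3.4 months.
growth_factor = 300_000
doubling_time_months = 3.4

# The number of doublings n satisfies 2**n = growth_factor, so n = log2(growth_factor).
doublings = math.log2(growth_factor)

# Total elapsed time implied by the trendline.
elapsed_months = doublings * doubling_time_months

print(f"{doublings:.1f} doublings over about {elapsed_months:.0f} months "
      f"(~{elapsed_months / 12:.1f} years)")
```

The result, roughly 18 doublings over a little more than five years, is only what the trendline implies. It is not an independent measurement.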

Deep learning is a machine learning method that relies on artificial neural networks, allowing computer systems to learn by example. Deep learning algorithms are, for the most part, loosely inspired by information-processing patterns found in biological nervous systems. An artificial neural network learning algorithm, usually called a "neural network", is a learning algorithm that is inspired by the structure and functional aspects of biological neural networks. Computations are structured in terms of an interconnected group of artificial neurons, processing information using a connectionist approach to computation.

Modern neural networks are non-linear statistical data modeling tools. They are usually used to model complex relationships between inputs and outputs, to find patterns in data, or to capture the statistical structure in an unknown joint probability distribution between observed variables. Machine learning is a branch of AI; it's more specific than the overall concept. Machine learning bases itself on the notion that we can build machines to learn on their own, from patterns and inferences, without constant supervision by humans.
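To make the "non-linear" point concrete: no single linear threshold unit can compute XOR, but a small network of interconnected threshold neurons can. The sketch below uses hand-picked weights (an illustrative assumption; nothing is learned from data) for a two-layer network whose hidden units behave like OR and AND:

```python
def step(z: float) -> int:
    """Heaviside step activation: the neuron fires (1) when its weighted input is positive."""
    return 1 if z > 0 else 0


def xor_network(x1: int, x2: int) -> int:
    # Hidden neuron 1 behaves like OR: fires if at least one input is 1.
    h1 = step(1.0 * x1 + 1.0 * x2 - 0.5)
    # Hidden neuron 2 behaves like AND: fires only if both inputs are 1.
    h2 = step(1.0 * x1 + 1.0 * x2 - 1.5)
    # Output neuron combines them as "OR and not AND", which is XOR.
    return step(1.0 * h1 - 1.0 * h2 - 0.5)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", xor_network(a, b))
```

Each neuron on its own is linear followed by a threshold. The non-linear input-output relationship comes from composing the two layers.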

Machine learning algorithms build a mathematical model of sample data, known as "training data", in order to make predictions or decisions without being explicitly programmed to perform the task. Support-vector machines (SVMs), also known as support-vector networks, are a set of related supervised learning methods used for classification and regression. Given a set of training examples, each marked as belonging to one of two categories, an SVM training algorithm builds a model that predicts whether a new example falls into one category or the other. The resulting model is a non-probabilistic, binary, linear classifier, although methods such as Platt scaling exist to use SVMs in a probabilistic classification setting. In addition to performing linear classification, SVMs can efficiently perform non-linear classification using what is called the kernel trick, implicitly mapping their inputs into high-dimensional feature spaces.
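The kernel trick relies on the fact that some kernel functions equal an inner product in a larger feature space. The sketch below assumes the degree-2 polynomial kernel k(x, z) = (x · z)² on 2-D inputs. The explicit map `phi` is written out only to check the identity, which a kernelized SVM never needs to do. This is not an SVM trainer:

```python
import math

def phi(x):
    """Explicit degree-2 feature map for a 2-D input (x1, x2) into 3-D."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)

def dot(u, v):
    """Ordinary inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def poly2_kernel(x, z):
    """Homogeneous polynomial kernel k(x, z) = (x . z)^2, computed entirely in input space."""
    return dot(x, z) ** 2

x, z = (1.0, 2.0), (3.0, 4.0)
print(poly2_kernel(x, z))      # kernel evaluated directly on the 2-D inputs
print(dot(phi(x), phi(z)))     # (approximately) the same value via the explicit 3-D map
```

Because a linear classifier's decisions can be written entirely in terms of inner products between examples, replacing each inner product with `poly2_kernel` gives a classifier that is linear in the 3-D feature space but quadratic in the original 2-D space.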

Rule-based machine learning is a general term for any machine learning method that identifies, learns, or evolves "rules" to store, manipulate or apply knowledge. The defining characteristic of a rule-based machine learning algorithm is the identification and utilization of a set of relational rules that collectively represent the knowledge captured by the system. This is in contrast to other machine learning algorithms that commonly identify a singular model that can be universally applied to any instance in order to make a prediction.
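Association rule learning is one concrete rule-based method. It scores candidate rules such as {beer} → {diapers} by their support (how often the items occur together) and their confidence (how often the rule holds when its left-hand side occurs). The sketch below uses hypothetical market-basket transactions and arbitrary thresholds. It brute-forces single-item rules instead of using an efficient algorithm such as Apriori:

```python
from itertools import combinations

# Hypothetical market-basket data, for illustration only.
transactions = [
    {"bread", "milk"},
    {"bread", "diapers", "beer", "eggs"},
    {"milk", "diapers", "beer", "cola"},
    {"bread", "milk", "diapers", "beer"},
    {"bread", "milk", "diapers", "cola"},
]

def support(itemset):
    """Fraction of transactions that contain every item in itemset."""
    return sum(itemset <= t for t in transactions) / len(transactions)

def confidence(antecedent, consequent):
    """Estimated P(consequent | antecedent) for the rule antecedent -> consequent."""
    return support(antecedent | consequent) / support(antecedent)

# Enumerate single-item rules {a} -> {b} and keep those passing both thresholds.
items = sorted(set().union(*transactions))
rules = []
for a, b in combinations(items, 2):
    for lhs, rhs in (({a}, {b}), ({b}, {a})):
        s, c = support(lhs | rhs), confidence(lhs, rhs)
        if s >= 0.6 and c >= 0.7:
            rules.append((lhs, rhs, s, c))

for lhs, rhs, s, c in rules:
    print(f"{lhs} -> {rhs}  support={s:.2f}  confidence={c:.2f}")
```

The surviving rules collectively represent what the system has learned. No single global model is produced.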

Rule-based machine learning approaches include learning classifier systems, association rule learning, and artificial immune systems. Reinforcement learning is an area of machine learning concerned with how software agents ought to take actions in an environment so as to maximize some notion of cumulative reward. In reinforcement learning, the environment is typically represented as a Markov decision process (MDP).
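When an MDP's transitions and rewards are known exactly, dynamic programming can compute optimal state values directly. Value iteration is the classic example. The sketch below assumes a hypothetical four-state corridor with a rewarding goal state and a discount factor of 0.9. Reinforcement learning methods approximate the same kind of solution without being handed this model:

```python
# A tiny, hypothetical MDP: a corridor of 4 states, where state 3 is a terminal goal.
# Actions move left (-1) or right (+1); entering the goal yields reward 1, all else 0.
N_STATES, GOAL, GAMMA = 4, 3, 0.9
ACTIONS = (-1, +1)

def transition(state, action):
    """Deterministic model of the environment: returns (next_state, reward)."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    return nxt, (1.0 if nxt == GOAL else 0.0)

def value_iteration(tol=1e-9):
    """Repeat Bellman optimality backups until the values stop changing."""
    V = [0.0] * N_STATES
    while True:
        delta = 0.0
        for s in range(N_STATES):
            if s == GOAL:
                continue  # the terminal state keeps value 0
            best = max(r + GAMMA * V[s2] for s2, r in (transition(s, a) for a in ACTIONS))
            delta = max(delta, abs(best - V[s]))
            V[s] = best
        if delta < tol:
            return V

V = value_iteration()
# Greedy policy with respect to the converged values, for each non-terminal state.
policy = [max(ACTIONS, key=lambda a: transition(s, a)[1] + GAMMA * V[transition(s, a)[0]])
          for s in range(N_STATES - 1)]
print([round(v, 3) for v in V])  # optimal state values
print(policy)                     # best action in each non-terminal state
```

States closer to the goal end up with higher value, and the greedy policy moves right everywhere.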

Many reinforcement learning algorithms use dynamic programming techniques. Unlike classical dynamic programming, however, reinforcement learning algorithms do not assume knowledge of an exact mathematical model of the MDP, and are used when exact models are infeasible. Reinforcement learning algorithms are used in autonomous vehicles or in learning to play a game against a human opponent. Unsupervised learning algorithms take a set of data that contains only inputs, and find structure in the data, like grouping or clustering of data points. The algorithms, therefore, learn from data that has not been labeled, classified or categorized.
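Clustering of unlabeled points can be illustrated with k-means (Lloyd's algorithm), one common clustering method among many. The sketch below assumes a small hypothetical 2-D data set, k = 2, and a fixed random seed for reproducibility. It alternates between assigning points to their nearest centroid and moving each centroid to the mean of its points:

```python
import random

def sq_dist(p, q):
    """Squared Euclidean distance between two 2-D points."""
    return (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2

def kmeans(points, k, iters=100, seed=0):
    """Plain k-means on 2-D points; returns (centroids, labels)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialise with k distinct data points
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: attach each point to its nearest centroid.
        labels = [min(range(k), key=lambda j: sq_dist(p, centroids[j])) for p in points]
        # Update step: move each centroid to the mean of its assigned points.
        new_centroids = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                new_centroids.append((sum(x for x, _ in members) / len(members),
                                      sum(y for _, y in members) / len(members)))
            else:
                new_centroids.append(centroids[j])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # converged: centroids (and hence assignments) no longer change
        centroids = new_centroids
    return centroids, labels

# Unlabelled toy data: two visually obvious groups.
data = [(1.0, 1.0), (1.5, 2.0), (1.2, 0.8), (8.0, 8.0), (8.5, 9.0), (9.0, 8.2)]
centroids, labels = kmeans(data, k=2)
print(labels)
```

No labels are supplied. The grouping comes only from distances between the inputs. Which group receives label 0 depends on the initialization.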

Instead of responding to feedback, unsupervised learning algorithms identify commonalities in the data and react based on the presence or absence of such commonalities in each new piece of data. A central application of unsupervised learning is in the field of density estimation in statistics, such as finding the probability density function, though unsupervised learning also encompasses other domains involving summarizing and explaining data features.
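Density estimation from unlabeled samples can be sketched with a kernel density estimate, which places a small Gaussian "bump" on every observation and averages them. The samples and the bandwidth of 0.5 below are illustrative assumptions. The bandwidth controls how smooth the estimated density is:

```python
import math

def gaussian_kde(samples, bandwidth):
    """Return a function estimating the probability density from 1-D samples."""
    n = len(samples)
    norm = 1.0 / (n * bandwidth * math.sqrt(2 * math.pi))

    def density(x):
        # Average of Gaussian kernels centred on each sample.
        return norm * sum(math.exp(-0.5 * ((x - s) / bandwidth) ** 2) for s in samples)

    return density

# Hypothetical unlabelled observations with two clumps.
samples = [1.8, 2.0, 2.1, 2.3, 5.9, 6.0, 6.4]
f = gaussian_kde(samples, bandwidth=0.5)

for x in (2.0, 4.0, 6.0):
    print(x, round(f(x), 3))

# Sanity check: a density should integrate to about 1 (crude Riemann sum over [-5, 15)).
dx = 0.01
total = sum(f(-5 + i * dx) for i in range(int(20 / dx))) * dx
print(round(total, 3))
```

The estimate is high near the two clumps and low in the gap between them, which is the kind of structure an unsupervised method can expose without any labels.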

The computational analysis of machine learning algorithms and their performance is a branch of theoretical computer science known as computational learning theory. Because training sets are finite and the future is uncertain, learning theory usually does not yield guarantees of the performance of algorithms. Instead, probabilistic bounds on the performance are quite common. The bias–variance decomposition is one way to quantify generalization error. Machine learning is the study of computer algorithms that improve automatically through experience. Applications range from data-mining programs that discover general rules in large data sets, to information filtering systems that automatically learn users' interests.
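For squared-error loss, the bias–variance decomposition can be written as follows. This form assumes targets y = f(x) + ε, where the noise ε has zero mean and variance σ². Expectations are taken over random training sets (which determine the fitted model \hat f) and over the noise:

```latex
\mathbb{E}\left[\big(y - \hat f(x)\big)^2\right]
  = \underbrace{\big(\mathbb{E}[\hat f(x)] - f(x)\big)^2}_{\text{bias}^2}
  + \underbrace{\mathbb{E}\left[\big(\hat f(x) - \mathbb{E}[\hat f(x)]\big)^2\right]}_{\text{variance}}
  + \underbrace{\sigma^2}_{\text{irreducible noise}}
```

A model that is too simple tends to have high bias. A very flexible one tends to have high variance.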

The book is written for advanced undergraduate and graduate students, and for developers and researchers in the field. No prior background in artificial intelligence or statistics is assumed. Unlike general AI, an ML algorithm does not have to be told how to interpret information. The simplest artificial neural networks consist of a single layer of artificial neurons. Though unsupervised learning and supervised learning are not completely formal or distinct concepts, they do help roughly categorize some of the things we do with machine learning algorithms.
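The classic single-layer network is Rosenblatt's perceptron: one layer of weights feeding a threshold unit, trained by nudging the weights whenever an example is misclassified. The sketch below trains it on logical AND, a linearly separable toy problem chosen for illustration. The learning rate and epoch limit are arbitrary choices:

```python
def train_perceptron(examples, lr=1.0, epochs=20):
    """Perceptron learning rule for a single layer with one output unit."""
    n = len(examples[0][0])
    w, b = [0.0] * n, 0.0
    for _ in range(epochs):
        errors = 0
        for x, target in examples:
            # Threshold unit: output 1 if the weighted sum plus bias exceeds 0.
            out = 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0
            err = target - out
            if err:
                errors += 1
                w = [wi + lr * err * xi for wi, xi in zip(w, x)]
                b += lr * err
        if errors == 0:
            break  # every training example is classified correctly
    return w, b

# Logical AND is linearly separable, so the perceptron rule converges.
AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_perceptron(AND)

def predict(x):
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0

print([predict(x) for x, _ in AND])
```

A single layer like this can only learn functions whose classes are separable by a line (or hyperplane). Problems outside that class need more layers.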

Traditionally, people refer to regression, classification and structured output problems as supervised learning. Density estimation in support of other tasks is usually considered unsupervised learning. The key idea behind the delta rule is to use gradient descent to search the hypothesis space of possible weight vectors to find the weights that best fit the training examples. This rule is important because gradient descent provides the basis for the backpropagation algorithm, which can learn networks with many interconnected units.
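A minimal sketch of the batch delta rule follows, in the common textbook formulation. A linear unit outputs o = w · x, its error is E(w) = ½ Σ_d (t_d − o_d)², and each step moves the weights by Δw_i = η Σ_d (t_d − o_d) x_id, which is gradient descent on E. The noiseless data from t = 2x + 1 and the learning rate are illustrative assumptions:

```python
def delta_rule(examples, lr=0.05, epochs=500):
    """Batch gradient descent on squared error for a linear unit o = w . x."""
    n = len(examples[0][0])
    w = [0.0] * n
    for _ in range(epochs):
        # The negative gradient of E(w) is sum_d (t_d - o_d) * x_d.
        grad = [0.0] * n
        for x, t in examples:
            o = sum(wi * xi for wi, xi in zip(w, x))
            for i in range(n):
                grad[i] += (t - o) * x[i]
        w = [wi + lr * gi for wi, gi in zip(w, grad)]
    return w

# Hypothetical noiseless data from t = 2*x + 1; the first input is a constant 1 acting as the bias.
data = [((1.0, x), 2.0 * x + 1.0) for x in (0.0, 1.0, 2.0, 3.0)]
w = delta_rule(data)
print([round(wi, 3) for wi in w])  # weights approach [1.0, 2.0]
```

Unlike the perceptron rule, the delta rule uses the unthresholded output, so it converges toward a least-squares fit even when the data are not perfectly separable or fit.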

It is also important because gradient descent can serve as the basis for learning algorithms that must search through hypothesis spaces containing many different types of continuously parameterized hypotheses. In general, machine learning systems and algorithms make predictions by finding hidden patterns in data. Deep learning started to perform tasks that were impossible to do with classic rule-based programming. Decision tree learning uses a decision tree as a predictive model to go from observations about an item to conclusions about the item's target value. It is one of the predictive modeling approaches used in statistics, data mining, and machine learning.

Tree models where the target variable can take a discrete set of values are called classification trees; in these tree structures, leaves represent class labels and branches represent conjunctions of features that lead to those class labels. Decision trees where the target variable can take continuous values are called regression trees. In decision analysis, a decision tree can be used to visually and explicitly represent decisions and decision making. In data mining, a decision tree describes data, but the resulting classification tree can be an input for decision making.
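Classification-tree learners typically grow a tree by repeatedly choosing the split that makes the resulting child nodes as "pure" as possible. Gini impurity is one widely used purity measure (used, for example, in CART-style trees). The sketch below finds only the best single threshold on one hypothetical numeric feature. A full tree would apply this recursively to each child:

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 1 - sum_k p_k^2 over the class proportions p_k."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def best_threshold(xs, ys):
    """Find the split x <= t that minimises the size-weighted Gini impurity of the two children."""
    best = (float("inf"), None)
    for t in sorted(set(xs))[:-1]:  # splitting at the maximum value would leave one side empty
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(ys)
        best = min(best, (score, t))
    return best

# Hypothetical one-feature data set: hours studied -> passed (1) or failed (0).
hours = [1, 2, 3, 4, 5, 6, 7, 8]
passed = [0, 0, 0, 0, 1, 1, 0, 1]
score, threshold = best_threshold(hours, passed)
print(f"split at hours <= {threshold}, weighted Gini = {score:.3f}")
```

The chosen split becomes an internal node. Its left branch here is already pure, so it would become a leaf labeled "failed".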

In computer science, there is a specialized discipline called numerical computation, which strives to improve the accuracy and efficiency of the computations that computers perform. For example, gradient descent and Newton's method are classic numerical computation algorithms.
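The difference between the two methods can be sketched on a one-dimensional problem. Gradient descent takes fixed-size steps against the first derivative. Newton's method divides the first derivative by the second derivative (the curvature), which usually needs far fewer steps near a minimum. The objective, starting point, learning rate and tolerance below are all illustrative assumptions:

```python
# Hypothetical objective whose minimum is at x = 9/4.
def f(x):
    return x ** 4 - 3 * x ** 3 + 2

def df(x):   # first derivative
    return 4 * x ** 3 - 9 * x ** 2

def d2f(x):  # second derivative
    return 12 * x ** 2 - 18 * x

def gradient_descent(x, lr=0.01, tol=1e-8, max_iter=10_000):
    """Step against the gradient with a fixed learning rate."""
    for i in range(1, max_iter + 1):
        step = lr * df(x)
        x -= step
        if abs(step) < tol:
            return x, i
    return x, max_iter

def newton(x, tol=1e-8, max_iter=100):
    """Scale each gradient step by the inverse of the second derivative."""
    for i in range(1, max_iter + 1):
        step = df(x) / d2f(x)
        x -= step
        if abs(step) < tol:
            return x, i
    return x, max_iter

x_gd, n_gd = gradient_descent(3.0)
x_nt, n_nt = newton(3.0)
print(f"gradient descent: x = {x_gd:.6f}, f(x) = {f(x_gd):.6f}, steps = {n_gd}")
print(f"Newton's method:  x = {x_nt:.6f}, f(x) = {f(x_nt):.6f}, steps = {n_nt}")
```

Newton's method pays for its speed by needing second derivatives, which become expensive (a full Hessian matrix) in high dimensions. That is one reason first-order methods such as gradient descent dominate in large-scale machine learning.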

Neural networks are well suited to machine learning models where the number of inputs is gigantic. The computational cost of handling such a problem is just too overwhelming for the types of systems we've discussed above. As it turns out, however, neural networks can be effectively tuned using techniques that are strikingly similar to gradient descent in principle. In supervised learning, we already know what the correct output should look like for a given data set, and we assume there is a relationship between the input and the output. It is a spoon-fed version of machine learning, where you select what kind of information samples to "feed" the algorithm and what kind of results are desired. Supervised learning algorithms are further categorized into "Regression" and "Classification" algorithms.

Representation-learning methods such as principal components analysis and cluster analysis try to preserve the information in their inputs while transforming it into a more useful form. This often allows reconstruction of the inputs coming from the unknown data-generating distribution, while not being necessarily faithful to configurations that are implausible under that distribution. Such feature learning replaces manual feature engineering, and allows a machine both to learn the features and to use them to perform a specific task.
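Principal components analysis gives a compact example of learning a representation and reconstructing inputs from it. The sketch below assumes NumPy is available and generates synthetic 3-D data that varies mostly along one direction. It keeps a single principal component (computed via SVD of the centred data) and maps the 1-D codes back into 3-D:

```python
import numpy as np

rng = np.random.default_rng(0)
# Synthetic 3-D data that varies mostly along one direction, plus small noise.
t = rng.normal(size=(200, 1))
X = t @ np.array([[2.0, 1.0, 0.5]]) + 0.05 * rng.normal(size=(200, 3))

# PCA via the singular value decomposition of the centred data matrix.
mean = X.mean(axis=0)
Xc = X - mean
U, S, Vt = np.linalg.svd(Xc, full_matrices=False)

k = 1                           # keep only the first principal component
codes = Xc @ Vt[:k].T           # learned 1-D representation of each input
X_hat = codes @ Vt[:k] + mean   # reconstruction back in the original 3-D space

explained = S[:k] ** 2 / np.sum(S ** 2)
err = np.mean((X - X_hat) ** 2)
print(f"variance explained by 1 component: {explained[0]:.3f}")
print(f"mean squared reconstruction error: {err:.5f}")
```

Inputs that lie near the learned direction are reconstructed almost exactly. Points far from it would be projected onto it, which is the sense in which the representation need not be faithful to configurations unlike the training data.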

Single-layer ML systems are not efficient at working with unlabeled input, partly because making sense of such information typically requires deep neural networks. Multilayer networks are better suited to this type of data handling, as each layer performs a specific function on the input before passing its results to the next layer. Because shallow ANNs have historically been far more common than DNNs, unsupervised learning has been considered a comparatively rare form of training. In supervised learning, algorithms trained on labelled data predict the classification of other, unlabelled data. Supervised learning uses labelled data sets to train algorithms that classify data or predict outcomes accurately.

In supervised learning, a training set is used to teach your models to yield the desired output. This training set includes inputs and correct outputs, which allow the model to learn over time. Supervised learning helps organizations solve multiple real-world problems at scale and is well suited to tasks such as spam detection, sentiment analysis, weather forecasting and pricing predictions. By contrast, an unsupervised machine learning algorithm parses through data to understand its structure and describe it in human-understandable terms. It is mainly used for exploring the structure of the information, extracting valuable insights, and detecting patterns that can then be put into operation to increase efficiency.

Many unsupervised learning algorithms are based on clustering.

In 2006, the media-services provider Netflix held the first "Netflix Prize" competition to find a program to better predict user preferences and improve the accuracy of its existing Cinematch movie recommendation algorithm by at least 10%. A joint team made up of researchers from AT&T Labs-Research in collaboration with the teams Big Chaos and Pragmatic Theory built an ensemble model to win the Grand Prize in 2009 for $1 million. Shortly after the prize was awarded, Netflix realized that viewers' ratings were not the best indicators of their viewing patterns ("everything is a recommendation") and they changed their recommendation engine accordingly. In 2010, The Wall Street Journal wrote about the firm Rebellion Research and their use of machine learning to predict the financial crisis. In 2012, Sun Microsystems co-founder Vinod Khosla predicted that 80% of medical doctors' jobs would be lost in the next two decades to automated machine learning medical diagnostic software.

In 2014, it was reported that a machine learning algorithm had been applied in the field of art history to study fine art paintings and that it may have revealed previously unrecognized influences among artists. In 2019, Springer Nature published the first research book created using machine learning. In 2020, machine learning technology was used to help make diagnoses and aid researchers in developing a cure for COVID-19. Machine learning has recently been applied to predict the green (environmentally friendly) behavior of humans. Machine learning technology has also been applied to optimise smartphones' performance and thermal behaviour based on the user's interaction with the phone.

An algorithm can be thought of as a set of rules/instructions that a computer programmer specifies, which a computer is able to process. Simply put, machine learning algorithms learn by experience, similar to how humans do. For example, after having seen multiple examples of an object, a computer running a machine learning algorithm can become able to recognize that object in new, previously unseen scenarios. At orquidea, we believe that specialization is necessary for high quality. This is why we have experienced mathematicians, statisticians, and data scientists in our organization to ensure that the right person performs the right job. The core tenets of machine learning are built on mathematical prerequisites.

Additionally, statistics forms an essential part of machine learning, as many learning algorithms, such as Naïve Bayes, Gaussian mixture models and hidden Markov models, are based on probability and statistics. Our mathematicians and data scientists work with our statisticians to combine the power of technology, advanced mathematics and statistics in machine learning and data mining. In supervised feature learning, features are learned using labeled input data. Examples include artificial neural networks, multilayer perceptrons, and supervised dictionary learning.
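To make the probabilistic basis of Naïve Bayes concrete, here is a minimal multinomial Naïve Bayes text classifier with add-one (Laplace) smoothing. It picks the class c that maximizes log P(c) + Σ_w log P(w | c). The four training messages, the "spam"/"ham" labels and the whitespace tokenizer are hypothetical choices made for illustration:

```python
import math
from collections import Counter

# Hypothetical labelled training messages.
train = [
    ("win cash now", "spam"),
    ("cheap cash offer", "spam"),
    ("meeting at noon", "ham"),
    ("lunch at noon tomorrow", "ham"),
]

def fit(examples):
    """Count class frequencies and per-class word frequencies."""
    class_counts = Counter(label for _, label in examples)
    word_counts = {c: Counter() for c in class_counts}
    for text, label in examples:
        word_counts[label].update(text.split())
    vocab = {w for counts in word_counts.values() for w in counts}
    return class_counts, word_counts, vocab

def predict(text, class_counts, word_counts, vocab):
    """Pick the class maximising log P(c) + sum_w log P(w | c)."""
    total = sum(class_counts.values())
    scores = {}
    for c in class_counts:
        n_words = sum(word_counts[c].values())
        score = math.log(class_counts[c] / total)
        for w in text.split():
            # Laplace smoothing keeps unseen words from zeroing out the probability.
            score += math.log((word_counts[c][w] + 1) / (n_words + len(vocab)))
        scores[c] = score
    return max(scores, key=scores.get)

model = fit(train)
print(predict("cash offer now", *model))
print(predict("see you at noon", *model))
```

The "naïve" part is the assumption that words are conditionally independent given the class, which is what lets the per-word log probabilities simply be added.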

In unsupervised feature learning, features are learned with unlabeled input data. Examples include dictionary learning, independent component analysis, autoencoders, matrix factorization and various forms of clustering. Machine learning and deep learning algorithms have ample room for growth, and we're sure to see even more practical applications entering the consumer and enterprise markets in the coming decade. In fact, Forbes notes that 82 percent of marketing leaders are already adopting machine learning to improve personalization.

So, we can expect to see ML leveraged commercially in targeted advertising and personalization of services well into the future. Whether we are referring to single-layer machine learning or deep neural networks, they both require training. While some simple ML programs, also called learners, can be trained with relatively small quantities of sample information, most require copious amounts of data input to function accurately.

Input is passed through the layers, with each adding qualifiers or tags, so deep learning does not require pre-classified data to make interpretations. Machine learning is "the study of computer programs that improve automatically with experience," according to Tom Mitchell, author of the book Machine Learning. This is an overview course that covers the fundamentals of machine learning, including classification, regression, model training, and evaluation across various algorithms. The goal of the course is to give students a foundational understanding of machine learning concepts from an intuitive and mathematical perspective.

Students will have the opportunity to practice this material through a limited number of homework assignments and a final group project. Unsupervised learning, another type of machine learning, refers to the family of algorithms mainly used in pattern detection and descriptive modeling. These algorithms do not have output categories or labels on the data. Modern-day machine learning has two objectives: one is to classify data based on models which have been developed, and the other is to make predictions for future outcomes based on these models.

A hypothetical algorithm for classifying data may use computer vision of moles coupled with supervised learning to classify cancerous moles, whereas a machine learning algorithm for stock trading may inform the trader of potential future outcomes. The term machine learning was coined in 1959 by Arthur Samuel, an American IBMer and pioneer in the field of computer gaming and artificial intelligence.
